APA Style

Constantine Andoniou. (2025). Rewiring Education for a Super-Smart Society: Cognitive Integrity, AI Ethics, and the Future of Knowledge. Computing&AI Connect, 2 (Article ID: 0023). https://doi.org/10.69709/CAIC.2025.112122

MLA Style

Constantine Andoniou. "Rewiring Education for a Super-Smart Society: Cognitive Integrity, AI Ethics, and the Future of Knowledge." Computing&AI Connect, vol. 2, 2025, Article ID: 0023, https://doi.org/10.69709/CAIC.2025.112122.

Chicago Style

Constantine Andoniou. 2025. "Rewiring Education for a Super-Smart Society: Cognitive Integrity, AI Ethics, and the Future of Knowledge." Computing&AI Connect 2 (2025): 0023. https://doi.org/10.69709/CAIC.2025.112122.

Research Article

Volume 2, Article ID: 2025.0023

Constantine Andoniou

constantine.andoniou@adu.ac.ae

College of Arts and Sciences, Abu Dhabi University, Khalifa City, Abu Dhabi, P.O. Box 59911, United Arab Emirates

Received: 19 May 2025 Accepted: 01 Sep 2025 Available Online: 03 Sep 2025 Published: 19 Sep 2025

Education systems need to establish methods for protecting cognitive integrity and epistemic trust as artificial intelligence (AI) increasingly controls knowledge delivery, educational processes, and institutional decision-making. Society 5.0 envisions a human-centered digital civilization in which AI is employed to enhance educational foundations rather than replace them. The proposed Cognitive-AI Interaction Framework (CAIF) serves as a model for studying how AI transforms the production and interpretation of educational knowledge. The framework combines socio-technical systems (STS) theory with Ubuntu, Kaitiakitanga, Confucian and Buddhist thought, Buen Vivir, and Human Rights principles to identify three epistemic modalities: (a) Epistemic Anchoring, (b) Cognitive Mediation Systems, and (c) Synthetic Knowledge Environments. These modalities illustrate how AI influences trust mechanisms, personalization processes, and the construction of meaning within learning systems. Safeguarding education in Society 5.0 demands both inclusive technology implementation and epistemic governance to maintain ethical design, interpretive transparency, and human cognitive autonomy. The paper recommends strategic outputs to help implement ethical AI systems in curriculum development, policy creation, and pedagogical design.

Education stands at a critical juncture, as our world experiences rapid technological advancement alongside growing uncertainty regarding knowledge. Society 5.0, first presented by the Japanese government, has since grown into a worldwide movement for human-centered, super-smart development beyond the information society of Society 4.0. This stage of social evolution embeds AI, cyber-physical systems, and advanced data analytics throughout social and institutional operations. Education serves as a vital component in this transition, as it not only generates knowledge but also shapes values through the application of AI tools and the implementation of epistemic protection mechanisms for learning. Whereas earlier digital reforms prioritized automation and efficiency, Society 5.0 emphasizes technologies that promote human well-being through ethical design and inclusive knowledge systems [1,2]. Educational processes are increasingly shaped by the fusion of technological resources with traditional teaching and learning methods, supported by institutional authority in knowledge verification and credentialing. The Cognitive-AI Interaction Framework (CAIF) serves to evaluate multiple levels of AI effects on educational systems. The framework shows how AI transforms knowledge systems in educational spaces through three distinct modalities: (a) Epistemic Anchoring, which describes how AI enhances the credibility of knowledge artifacts together with institutional trust and traceability; (b) Cognitive Mediation Systems, which include AI tools that personalize educational content while providing students with learning assistance and behavioral guidance; and (c) Synthetic Knowledge Environments, which consist of generative AI content together with immersive simulations and synthetic instructional agents.

The framework (discussed in more detail in Section 3.4) provides a systematic approach for assessing the epistemic effects of AI by examining knowledge preservation and transformation, as well as their impact on educational approaches and assessment methods. The framework draws its foundation from Socio-Technical Systems (STS) theory, which demonstrates that technological advancement depends on institutional development [3]. CAIF also draws support from multiple worldwide ethical traditions, which provide distinct perspectives for developing educational and AI technologies that respect cultural values and moral principles. The paper establishes CAIF as an analytic method that examines AI implementation in Society 5.0 education from an epistemological perspective. The framework defends cognitive integrity by emphasizing that AI implementation in education must protect interpretability, provenance, and reflective agency to be sustainable. To this end, strategic recommendations are offered through a declaration and a series of policy briefs to support educational institutions and policy decision-makers. The main aim is to integrate AI critically so that education remains a domain where knowledge sovereignty, ethical learning, and collective human purpose thrive. To operationalize this aim, the study adopts a conceptual methodology grounded in framework development and global epistemic traditions.

This conceptual study adopts a theory synthesis and integrative framework development approach. The Cognitive-AI Interaction Framework (CAIF) was developed by integrating socio-technical systems (STS) theory with a range of ethical and philosophical traditions from various global contexts. The process entailed a structured review of interdisciplinary literature, relevant policy frameworks, and ethical codes, encompassing documents from UNESCO, the European Commission, national governments, and Indigenous knowledge traditions. This methodology supports the construction of a normative and analytic framework for evaluating AI’s impact on education.

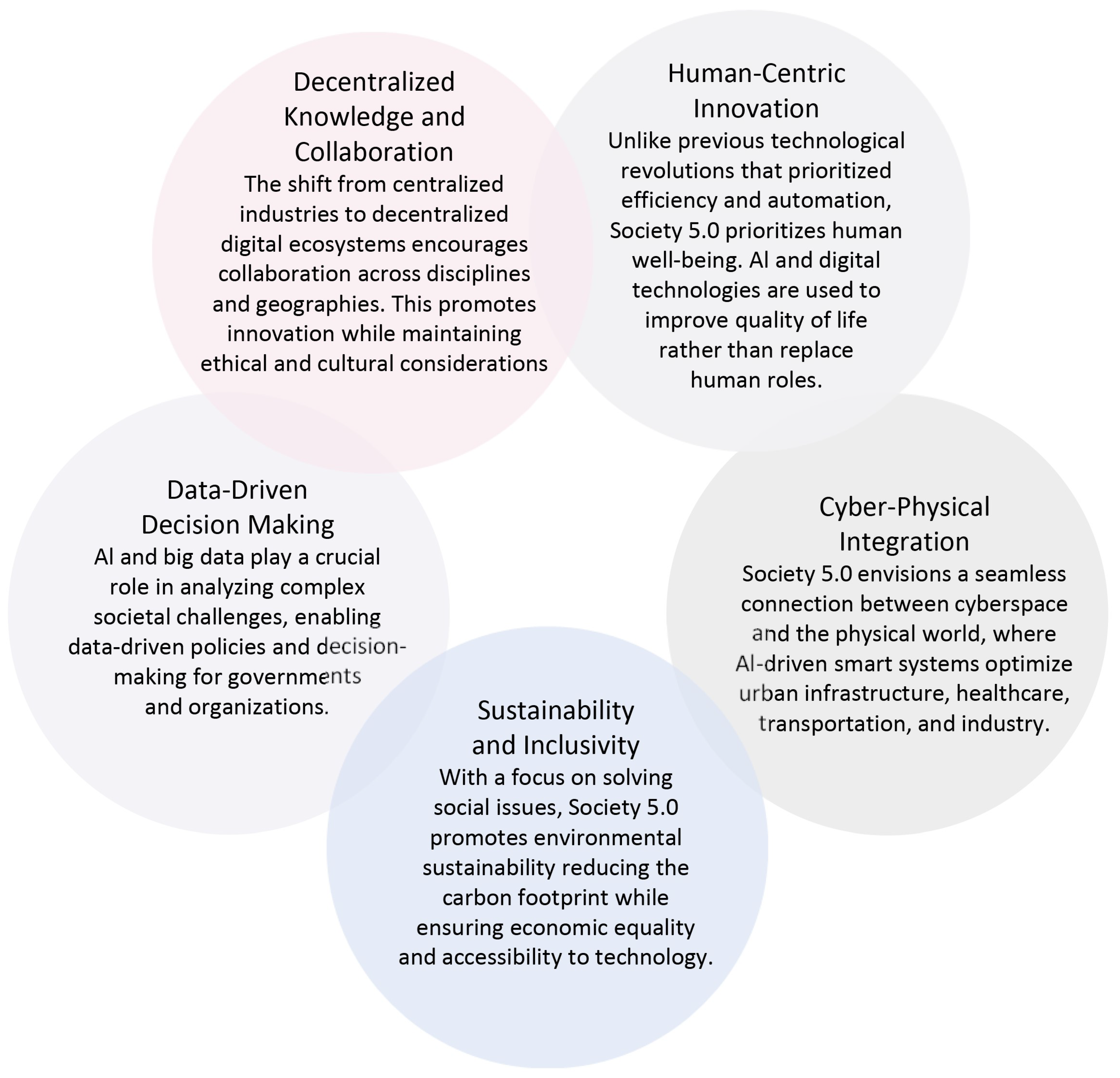

3.1. The Evolution Toward Society 5.0

Societal development can be understood as a sequence of stages, each defined by the organization of knowledge, labor, and value. Society 1.0 operated as a hunter-gatherer system with oral knowledge transmission. Society 2.0 introduced agrarian communities and spiritual institutions. Society 3.0 emerged with industrialization and standardized schooling, while Society 4.0 brought global connectivity and digital information systems (Figure 1). The proposed super-smart society of Society 5.0 merges intelligent infrastructure with human-centered values [1,2].

The evolution from Society 4.0 to Society 5.0 represents an epistemological transformation, not only a technological advance. Knowledge changes in its origins, its verification processes, and its methods of interpretation. This development has profound effects on educational systems. Education in Society 5.0 needs to move from knowledge transmission toward programs that develop learners who can work within AI environments while making decisions and practicing ethical reasoning. Society 5.0 differs from other visions because it advocates human-AI harmony without embracing either technological utopianism or automated dystopia. The realization of this vision depends heavily on education, which needs to establish governance and curricular frameworks that emphasize interpretability and pluralism alongside cognitive sovereignty.

3.2. Key Characteristics of Society 5.0

The philosophical foundation of Society 5.0 combines technological optimism with social responsibility. Five core characteristics define this model and guide its application across societal domains [1,2] (Figure 2):

Human-Centric Innovation: Society 5.0 prioritizes human needs over market efficiency. AI tools are designed for empathetic communication and inclusive hiring, supporting well-being and social equity.

Cyber-Physical Integration: The fusion of cyberspace with the physical world enables AI-powered systems such as collaborative robots and wearable IoT devices that enhance workplace safety and preserve human oversight.

Sustainability and Inclusivity: Equitable access to digital infrastructure is a central goal. AI and IoT technologies support marginalized communities in urban agriculture and remote work through accessible, inclusive platforms.

Data-Driven Decision-Making: Big data analytics guide targeted interventions in education and workforce development, helping institutions respond to future employment challenges.

Decentralized Knowledge and Collaboration: Platform-based models and blockchain technologies empower individuals through credential verification, transparent compensation, and ethical, worker-owned ecosystems.

Together, these characteristics articulate a vision where intelligent systems reinforce human dignity, autonomy, and shared progress.

3.3. Socio-Technical Systems (STS) Theory

Socio-Technical Systems (STS) theory establishes a fundamental framework for analyzing how artificial intelligence transforms educational systems (Figure 3). According to STS, technology exists as part of cultural, institutional, and human systems and evolves together with them [4]. In education, AI is more than a tool: it is gradually becoming integral to the transformation of knowledge structures, teaching practices, and student engagement with learning. Orlikowski [3] demonstrated that technology interacts with social systems through a continuous feedback process that transforms both the technology and the social structures. The introduction of AI in classrooms starts with supporting functions such as assessment automation and content delivery, yet produces profound changes in teaching authority and student learning independence. A comprehensive understanding of these changes requires more than technical knowledge, as it also demands awareness of the cognitive, ethical, and cultural factors that interact with technology.

STS theory offers an effective approach to counter technological determinism by supporting human–AI collaborative design processes. Educational technology development should occur through collaborative efforts between humans and AI systems while maintaining focus on local values and specific learning environments. This co-development process is essential because AI systems shape how learners interpret concepts such as knowledge, evidence, and truth. The STS framework enables ethical and context-sensitive implementation of AI in education.

3.4. The Cognitive-AI Interaction Framework (CAIF)

The Cognitive-AI Interaction Framework (CAIF) builds on STS theory to describe three distinct ways AI interacts with knowledge and learning systems:

Epistemic Anchoring: AI tools support educational knowledge verification and institutional trust. Examples include blockchain-based credentialing, semantic citation validation, and plagiarism detection systems [6]. These systems help ensure content authenticity, but they should be used in ways that keep students from depending too heavily on machine verification [7].

Cognitive Mediation Systems: Adaptive learning environments, AI tutors, and recommendation engines personalize instructional pathways to support individualized student learning [8]. Although these educational tools can enhance student outcomes, they can also narrow intellectual diversity and generate algorithmic dependencies. Educators must protect interpretive agency through transparency, human supervision, and diverse pedagogical approaches.

Synthetic Knowledge Environments: AI-created materials combined with computer-generated characters and virtual educational simulations create interactive learning scenarios. These tools open up new teaching methods, but they make it harder to distinguish between authentic and fabricated content. Without epistemic labeling and critical reflection protocols, students tend to accept artificial representations without question [9].

The modalities operate as both a diagnostic and a design framework, which helps educational professionals and institutions understand the implications of AI for knowledge development and educational ethics. CAIF provides an analytical approach to anticipate and direct educational evolution in AI-driven societies, though it does not prescribe a single solution. Analyzing technological form in relation to epistemic function enables researchers to differentiate AI systems that assist learning from those that distort meaning. These theoretical foundations provide the basis for the following results, which summarize the CAIF framework’s core modalities and their implications for AI-integrated education.

![Figure 3: The impact of new technology on complex sociotechnical systems (adapted from van Engelen) [5].](/uploads/source/articles/computingai-connect/2025/volume2/20250023/image003.png)

4.1. Global Ethical Anchors for Human-Centric AI in Education

The increasing integration of AI systems into educational structures requires stronger ethical frameworks that respect cultural diversity. Society 5.0 originated as a Japanese philosophical concept rooted in the values of ikigai and wa [1]; however, its global adoption necessitates an ethical framework that extends beyond Japan. The governance of AI in education requires multiple global perspectives that value human dignity together with collective knowledge autonomy. This section provides a brief analysis of five regional ethical systems, from Africa, Oceania, Asia, the Americas, and Europe, to assess their influence on the design and deployment of CAIF modalities.

Ubuntu (Sub-Saharan Africa): Ubuntu is a key relational philosophy in sub-Saharan Africa, summarized in the statement “I am because we are”. This approach emphasizes interdependence, community, and shared responsibility for knowledge production [10]. In education, Ubuntu challenges forms of personalization that isolate individual learners and break down collaborative knowledge systems. According to Higgs [11], Ubuntu-based ethics reject individualistic Western approaches by promoting communal values, collective intelligence, and dialogical participation in education. Within CAIF, Ubuntu aligns with cognitive mediation systems by supporting the development of AI tools that promote peer learning, social knowledge scaffolding, and co-engagement activities.

Kaitiakitanga (Oceania-Māori Worldview): In Māori thought, Kaitiakitanga refers to stewardship and guardianship, particularly over cultural and environmental resources [12]. Applied to AI in education, this concept insists that knowledge must be preserved with reverence, especially when it concerns endangered languages, indigenous epistemologies, or oral traditions. Kaitiakitanga emphasizes the importance of epistemic anchoring, ensuring that AI systems do not extract or commodify knowledge, but instead serve as stewards of cultural continuity [13]. AI-driven archival systems, for example, can be designed to preserve community ownership over data and embed indigenous consent protocols into content generation.

Confucian and Buddhist Ethics (East and Southeast Asia): Philosophical systems in East and Southeast Asia emphasize ethical self-cultivation, interdependence, and social harmony. Confucian ethics, particularly the value of li (ritual propriety), prioritize structured moral development and relational responsibility in learning [14]. Buddhist thought adds a contemplative dimension, promoting mindfulness, non-attachment, and moral discernment [15]. These frameworks challenge the techno-solutionism often seen in AI-based education and argue for the preservation of pedagogical intentionality. In CAIF terms, this maps onto cognitive mediation systems, where AI systems must support, not manipulate, learner reflection, autonomy, and moral reasoning.

Buen Vivir (Latin America): Buen Vivir (or Sumak Kawsay), rooted in Andean cosmologies, emphasizes holistic well-being, reciprocity, and harmony with the natural world [16]. It critiques linear, industrial notions of development and advocates for culturally situated and ecologically balanced knowledge systems. When applied to AI in education, Buen Vivir problematizes Synthetic Knowledge Environments that are disconnected from learners’ lived experiences or that marginalize indigenous perspectives. AI-generated simulations and content must be grounded in contextual relevance and moral depth. As Walsh [17] explains, digital technologies in Latin America must be reoriented to serve epistemic justice and community-based validation.

Human Rights Tradition (Europe and Global Institutions): The Human Rights tradition, which emerged from Enlightenment philosophy and post-war global institutions, emphasizes equality, freedom of expression, and access to information. The UNESCO Recommendation on the Ethics of Artificial Intelligence [18] reflects this tradition by establishing obligations for transparency, non-discrimination, and inclusive access. These principles apply across all CAIF modalities, particularly in regulating algorithmic bias, protecting learner data, and ensuring explainability.

To clarify their relevance across the framework, Figure 4 summarizes the core principles of each ethical tradition and how they align with the modalities of the CAIF. The application of AI systems requires compliance with legal standards as well as with principles of epistemic justice, so that learners can develop their own independent understanding. Together, the ethical anchors form a multicultural epistemic framework that guides the governance and development of AI in education. The CAIF modalities are philosophically grounded in these principles, which help ensure that AI tools meet the ethical, cognitive, and cultural requirements of learners across diverse backgrounds. On this basis, educational systems can move beyond technological advancement toward moral development. Integrating these values into policy, pedagogy, and platform design supports the development of learners who are both smart and wise.

4.2. Applying the Cognitive-AI Interaction Framework (CAIF) to Educational Systems

The Cognitive-AI Interaction Framework (CAIF) provides a way to study how artificial intelligence transforms education by changing how knowledge is verified, experienced, and trusted.
While Section 3.4 outlined the foundational principles and theoretical architecture of CAIF, this section applies the three CAIF modalities (Epistemic Anchoring, Cognitive Mediation Systems, Synthetic Knowledge Environments) to concrete educational practices, with attention to curriculum, pedagogy, assessment, and governance.

4.2.1. Safeguarding Knowledge Provenance with Epistemic Anchoring

AI systems that perform epistemic anchoring verify the trustworthiness of information along with its origin. The widespread adoption of AI-generated content creates new risks of misinformation, fake academic outputs, and incorrect citations. Institutions need to implement semantic citation validators, AI-detection systems, and blockchain-based credentialing platforms [6,7] to support epistemic integrity in their academic programs. Together, such tools allow student references to be checked against academic databases, help prevent fraudulent credentials, and enable worldwide transcript transfer. These technologies help build trust in the authenticity of academic products.

These systems must complement human judgment. Educators should assist students in contextualizing AI outputs and developing epistemic literacy skills, including the ability to assess the reliability of sources. Excessive reliance on automated verification could result in interpretive passivity, because students might follow system outputs without performing proper critical evaluation. Human-in-the-loop design is therefore essential for epistemic anchoring: machine logic should support reflective reasoning while human oversight is preserved.

4.2.2. Structuring Personalization and Pedagogical Flow in Cognitive Mediation Systems

The term cognitive mediation systems describes AI platforms that manage learning content through adaptation or recommendation features. Examples include adaptive assessments, recommendation engines, and intelligent tutoring systems. These tools can improve outcomes by boosting student interest, providing instant feedback, and enabling customized learning approaches [12,19].

Mediation also introduces a range of potential risks. Algorithmic personalization in education can result in intellectual narrowing, as students are repeatedly exposed to similar content, reinforcing cognitive biases and limiting their capacity to explore new concepts [20]. A learner who interacts only with AI-curated readings on a theory might miss out on different or opposing perspectives. Systems need to adopt pluralistic design principles to incorporate multiple perspectives and contradictory epistemological viewpoints. Education professionals must retain the authority to modify system recommendations while guiding students beyond algorithmically generated content.

Interpretive dependency presents an additional risk because students tend to treat AI outputs as absolute facts, particularly when responses carry authoritative tones. AI systems require features that explain recommendation rationales to users, and students need education about analyzing AI decision-making processes. Teacher training should treat cognitive mediation as an educational partnership that requires human involvement rather than as a fully automated process. Instructors need to recognize how learning algorithms modify student educational paths, and they must know when to intervene with their own evaluation during key instructional and assessment phases.

4.2.3. Navigating Synthetic Pedagogy in Synthetic Knowledge Environments

Synthetic knowledge environments consist of AI-produced interactive learning environments, which include virtual laboratories, historical avatar interfaces, and synthetic storytelling features that boost student involvement and practical learning experiences. Through these tools, students can experience reconstructed historical cities and interact with AI avatars of important thinkers [9].

Simulations add depth to educational experiences, yet they make it challenging to distinguish between authentic content and simulated material. Learners tend to mistake simulated content for genuine historical fact or academic consensus if the simulated experience lacks proper critical guidance. Humanities subjects face particular challenges because interpretation and context are their foundation. The educational application of simulation requires three essential transparency protocols: (a) the simulation must include a distinct label indicating it is a synthetic creation; (b) the system must show both the sources used for creation and the algorithms that guide its design; and (c) students need proper guidance to evaluate what the representation selects and what it omits. A virtual simulation of a historical trial, for example, may omit marginalized perspectives unless designers make a conscious effort to include them. Improperly implemented AI perpetuates dominant narratives while silencing marginalized voices.

Educational use of simulated experiences requires students to participate in interpretive reflection tasks that examine the foundational assumptions of the simulation, evaluate different representation perspectives, and assess the ethical implications of avatar use. These activities help students understand simulations better and recognize them as discursive instruments instead of passive displays.

Simulated environments also create new problems related to accessibility and fairness. Virtual learning systems with advanced features need a strong infrastructure to operate properly, which creates challenges for schools with limited resources. Educational institutions need to develop such tools inclusively, through open-source models that provide localization capabilities and accessible versions, to avoid worsening digital inequities.

The three CAIF modalities provide an organized framework for educational institutions to implement artificial intelligence systems. Epistemic anchoring supports the verifiability of knowledge, cognitive mediation systems deliver personalized education but need strict oversight, and synthetic knowledge environments require ethical oversight so that the new experiences they offer are properly examined. Educators need to approach AI by combining resistance with critical evaluation to confirm that new systems fulfill educational objectives, which include understanding, interpretation, and meaning-making. These applied insights now lead to a broader conceptual reflection, situating CAIF within existing literature and outlining future directions for empirical validation and institutional adaptation.

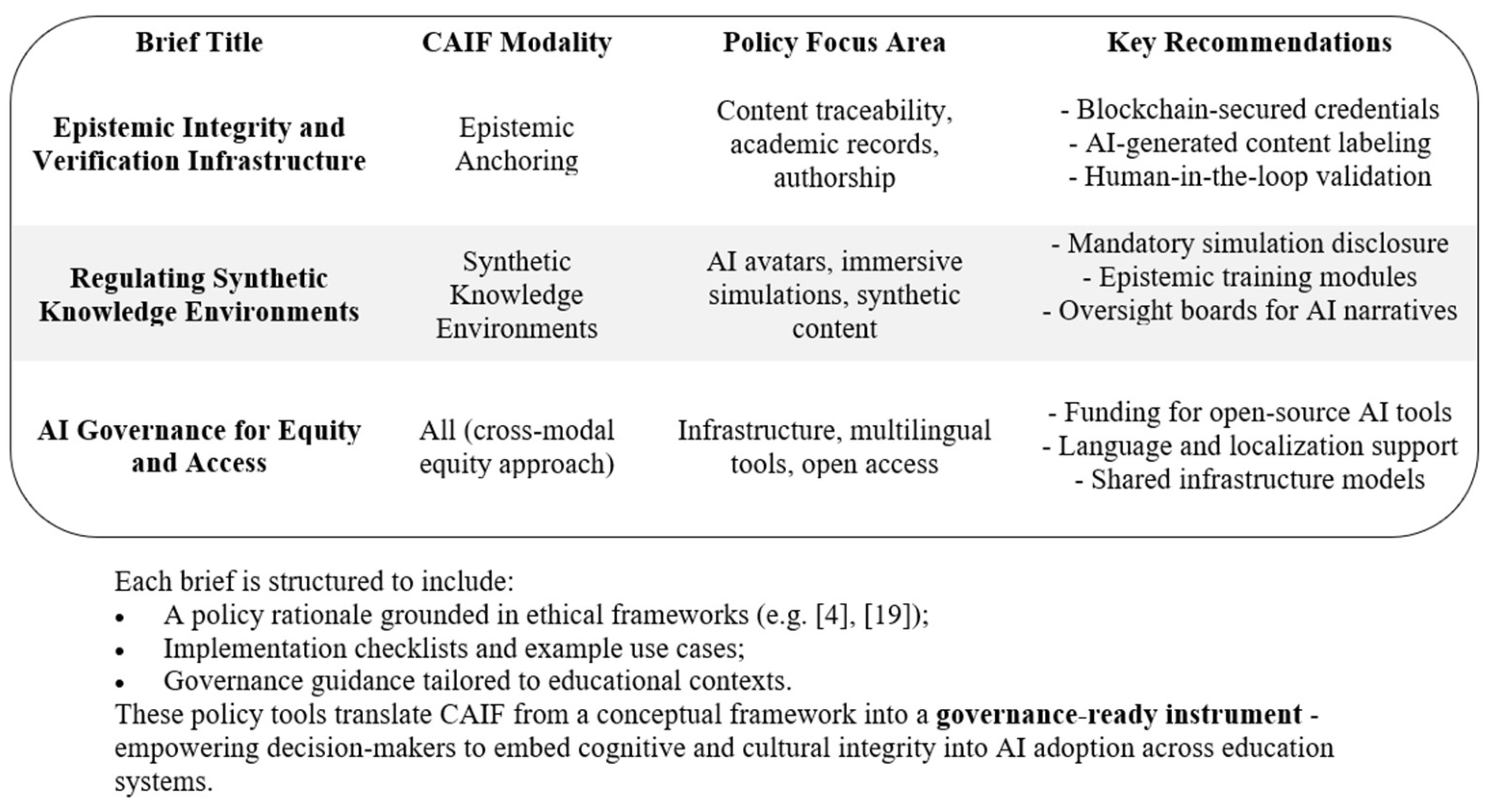

5.1. Governance and Policy Implications for Society 5.0 Education

Educational governance must establish new methods to handle both the technical aspects and the intellectual consequences of AI systems as they are integrated into educational infrastructure. The current ethical governance system, which relies on abstract principles and delayed monitoring, no longer meets present needs. A new governance model is required, one that anticipates problems and engages stakeholders through principles of cognitive integrity. AI systems in Society 5.0 educational ecosystems should operate as collaborative meaning-makers instead of independent authorities, while remaining accountable to human teachers, students, and their communities. CAIF functions as a base model to demonstrate how AI affects knowledge through its three distinct processes: epistemic anchoring, cognitive mediation systems, and synthetic knowledge environments. Ensuring knowledge integrity in AI-driven education requires distinct governance approaches for each modality.

Transparency Protocols and Epistemic Labeling: All educational institutions must establish mandatory transparency standards that apply to all AI systems, including content generation, assessment, and recommendation systems. These requirements align with international policy directives, such as the European Commission’s guidelines for trustworthy AI in education, which emphasize algorithmic transparency, accountability, and explainability in educational tools [21]. Educational platforms must label AI-generated content, maintain records of algorithmic decisions that affect learner outcomes, and develop standards for epistemic metadata that display authorship information together with source credibility and interpretive scope. Such practices are essential for maintaining epistemic anchoring and preventing the normalization of synthetic content as factually equivalent to human-authored knowledge.
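The labeling and record-keeping requirements described here can be made concrete with a small data structure. The following is a minimal sketch, not a standard: the `EpistemicLabel` class, its field names (`authorship`, `sources`, `source_credibility`, `interpretive_scope`), the vocabulary of values, and the two helper functions are all hypothetical choices made for illustration, assuming a platform that attaches one label to each content item.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List


@dataclass
class EpistemicLabel:
    """Hypothetical epistemic metadata attached to one piece of learning content."""

    content_id: str
    authorship: str  # assumed vocabulary: "human", "ai_generated", "ai_assisted"
    sources: List[str] = field(default_factory=list)  # provenance of the content
    source_credibility: str = "unreviewed"  # e.g. "peer_reviewed", "unreviewed"
    interpretive_scope: str = ""  # what the content does and does not claim

    def is_synthetic(self) -> bool:
        """True when AI took part in producing the content."""
        return self.authorship in {"ai_generated", "ai_assisted"}


def render_label(label: EpistemicLabel) -> str:
    """Build the short notice a learner would see next to the content."""
    origin = "AI-generated or AI-assisted" if label.is_synthetic() else "Human-authored"
    return (
        f"{origin} | sources listed: {len(label.sources)} | "
        f"credibility: {label.source_credibility}"
    )


def to_audit_record(label: EpistemicLabel, decision: str) -> str:
    """Serialize the label plus one algorithmic decision for record keeping."""
    record = asdict(label)
    record["decision"] = decision
    return json.dumps(record, sort_keys=True)


if __name__ == "__main__":
    label = EpistemicLabel(
        content_id="unit-3-summary",
        authorship="ai_generated",
        sources=["course reader"],
        interpretive_scope="overview only",
    )
    print(render_label(label))
    print(to_audit_record(label, "recommended to learner"))
```

Keeping the learner-facing notice (`render_label`) separate from the stored record (`to_audit_record`) mirrors the two obligations in the text: visible labeling of AI-generated content and a retained log of algorithmic decisions that educators or auditors can review later.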
Human-AI Co-Education Policies: Policy implementation must ensure that educators retain the authority to interpret the outputs of AI systems. Educational staff should hold the following rights: (a) human instructors must be able to reject AI-generated curriculum and assessment recommendations; (b) educators must be able to stop automated feedback systems that misdirect or demotivate students; and (c) educators must be able to apply their own judgment to place AI-generated insights in a proper context. Cognitive mediation systems need constant monitoring by educators, who must learn both how AI systems operate and how to evaluate their outputs.

The implementation of AI systems should not deepen educational inequalities. Public institutions and national organizations need to establish policies that provide AI tools to students regardless of their location, financial status, or language abilities. AI systems require training data drawn from diverse collections that encompass multiple epistemological perspectives as well as varied linguistic and cultural knowledge domains. Synthetic knowledge environments will reproduce dominant narratives unless deliberate steps are taken to incorporate diverse epistemic content.

Verification and external auditing also need to be implemented. Effective AI governance requires verification systems that operate through independent third-party structures. Third-party audit mechanisms should assess educational AI systems for fairness, transparency, and epistemic reliability. Audits should go beyond performance metrics to assess interpretive fidelity, cognitive bias mitigation, and learner agency safeguards. Blockchain technology enables the verification of educational credentials, safeguarding student achievements through secure, transferable, and tamper-resistant systems.

Policy Literacy and Participatory Design: The governance process needs to become more democratic. Educational systems require the collaboration of stakeholders such as educators, learners, parents, and community leaders in the design, implementation, and assessment of AI tools. Educational institutions should integrate AI policy literacy into teacher training, school leadership programs, and curriculum development. Co-design approaches enhance the relevance of AI tools while building institutional trust and protecting interpretive autonomy.

Society 5.0 governance serves a purpose that goes beyond technical control because it enables the collective development of knowledge systems. Through the CAIF framework, institutions can shift from regulatory responses to design-oriented approaches, which position AI as an educational partner rather than a controlling authority.

5.2. Strategic Outputs for Educational Futures

The successful implementation of the Cognitive-AI Interaction Framework (CAIF) in educational institutions demands both practical implementation tools and a clear conceptual understanding among educational professionals and policymakers. Strategic outputs help maintain the principles of epistemic integrity and human-centered, equitable learning during AI implementation. This section introduces two key initiatives: a Global Declaration for value alignment and a Policy Briefing Series for local implementation.

5.2.1. Declaration for Human-Centered AI in Education

A declaration with worldwide endorsement would define essential ethical guidelines for incorporating artificial intelligence in educational settings.
The declaration should incorporate ethical principles that resemble those of the UNESCO Recommendation on the Ethics of Artificial Intelligence [18] and the AI4People guidelines [22]. This declaration should ensure: (a) the protection of cognitive integrity through epistemic transparency, learner autonomy, and knowledge provenance; (b) the embrace of epistemic diversity through Ubuntu, Kaitiakitanga, and Buen Vivir principles [10,12,16]; and (c) the development of inclusive governance models that include teachers, learners, and their communities. The declaration serves as a normative framework that enables educational institutions to reform their curricula and platforms and to develop policies aligned with AI integration. Figure 5 contains a draft declaration that institutions can adapt.

5.2.2. AI in Education Policy Briefing Series

A modular Policy Briefing Series (Figure 6) is proposed to assist decision-making at both national ministry and educational leadership levels. These briefs translate CAIF into governance-friendly tools by analyzing major policy domains and using ethical frameworks to provide recommendations. Each brief is designed to assist both pilot programs and national implementations. The briefs provide adaptable structures for different regions and systems, including templates, checklists, and policy metrics. Through these briefs, CAIF becomes a framework for inclusive governance and educational foresight.

The primary issue is no longer whether to integrate AI, but how to implement it responsibly. This paper has argued that effective integration requires attention not only to functional aspects but also to the epistemic and ethical implications of AI. Education within Society 5.0 needs to safeguard both the availability of knowledge and the integrity of interpretation and meaning [1,2].

The Cognitive-AI Interaction Framework (CAIF) serves as an evaluation and guidance system for assessing AI’s effects on learning processes. Through its three modalities (Epistemic Anchoring, Cognitive Mediation Systems, and Synthetic Knowledge Environments), CAIF connects AI integration to the processes by which knowledge is produced and experienced. The framework also links system design elements, educational objectives, and cognitive learning outcomes.

The framework grounds its principles in worldwide ethical traditions, including Ubuntu, Kaitiakitanga, Confucian thought, Buen Vivir, and Human Rights, to establish that Society 5.0 education needs to preserve its pluralistic, interpretive, and human-centered approach [10,16,18]. These traditions support CAIF’s recommendation for technology systems that work with established values instead of replacing them.

The strategic outputs, which include policy tools, curricular reforms, and literacy programs, show how CAIF can move from theoretical concepts to practical applications. The outputs help educational professionals and policymakers safeguard both the content of student learning and students’ abilities to understand, critically evaluate, and contextualize information.

The future of education will be shaped by our intentional design choices rather than by AI’s intellectual capabilities. Through frameworks like CAIF, we can establish AI as an augmentation tool that preserves education as a domain of ethical inquiry and epistemic freedom.

| Abbreviation | Definition |
| --- | --- |
| AI | Artificial Intelligence |
| CAIF | Cognitive-AI Interaction Framework |
| IoT | Internet of Things |
| STS | Socio-Technical Systems |

The author confirms that he was solely responsible for the conception, design, analysis, interpretation, drafting, visualization, and final approval of the article.

This is a theoretical manuscript. No empirical data were generated or analyzed. All referenced materials are publicly accessible through the cited sources.

No consent for publication is required, as the manuscript does not involve any individual personal data, images, videos, or other materials that would necessitate consent.

The author declares no conflicts of interest regarding this manuscript.

The study did not receive any external funding and was conducted using only institutional resources.

The author acknowledges the institutional support of Abu Dhabi University.

[1] H. Deguchi, Y. Hirai, and S. Muto, Society 5.0: A People-Centric Super-Smart Society; Springer: Berlin/Heidelberg, Germany, 2020.

[2] L. Floridi and J. Cowls, “A unified framework of five principles for AI in society,” Harvard Data Sci. Rev., vol. 1, no. 1, 2019.

[3] W. J. Orlikowski, “The duality of technology: Rethinking the concept of technology in organizations,” Organ. Sci., vol. 3, no. 3, pp. 398–427, 1992.

[4] A. Oosthuizen and L. Pretorius, “Systemic change and socio-technical systems thinking in education,” S. Afr. J. Educ., vol. 36, no. 3, pp. 1–10, 2016.

[5] Y. van Engelen, “Explaining Socio-Technical Systems,” 2020. [Online]. Available: https://yaelvengelen.medium.com/explaining-socio-technical-systems-94993a68948c.

[6] A. Grech and A. F. Camilleri, Blockchain in Education; Joint Res. Centre, European Commission: Brussels, Belgium, 2017.

[7] L. Floridi and J. Cowls, “A unified framework of five principles for AI in society,” Harvard Data Sci. Rev., vol. 1, no. 1, 2019.

[8] K. Holstein, J. Wortman Vaughan, H. Daumé, III, M. Dudik, and H. Wallach, “Improving fairness in machine learning systems: What do industry practitioners need?” 2019.

[9] S. M. West and J. R. Allen, “Deepfakes and education: The coming crisis of trust,” Brookings Inst., 2021. [Online]. Available: https://www.brookings.edu.

[10] T. Metz, “Ubuntu as a moral theory and Human Rights in South Africa,” Afr. Hum. Rights Law J., vol. 22, no. 1, pp. 1–17, 2022. Available: https://scielo.org.za/pdf/ahrlj/v11n2/11.pdf.

[11] P. Higgs, “Ubuntu and the politics of communalism in South African education,” Educ. Philos. Theory, vol. 44, no. S2, pp. 37–55, 2012.

[12] J. Hutchings, J. Tipene, and H. Phillips, Kaitiakitanga: Māori Perspectives on Conservation; Huia Publishers: Wellington, New Zealand, 2012.

[13] L. T. Smith, Decolonizing Methodologies: Research and Indigenous Peoples, 3rd ed.; Zed Books: London, UK, 2021. Available: https://www.bloomsbury.com/us/decolonizing-methodologies-9781786998125/

[14] J. Li, “The Confucian ideal of harmony and its modern interpretations,” Philos. East West, vol. 63, no. 4, pp. 568–582, 2013.

[15] D. R. Loy, Money, Sex, War, Karma: Notes for a Buddhist Revolution; Wisdom Publications: New York, NY, USA, 2008. Available: https://wisdomexperience.org/wp-content/uploads/2018/02/Loy-Lesson-3.pdf

[16] E. Gudynas, “Buen Vivir: Today’s tomorrow,” Dev., vol. 54, no. 4, pp. 441–447, 2011.

[17] C. E. Walsh, “Development as Buen Vivir: Institutional arrangements and (de)colonial entanglements,” Dev., vol. 53, no. 1, pp. 15–21, 2010.

[18] UNESCO, “Recommendation on the Ethics of Artificial Intelligence,” 2021. [Online]. Available: https://unesdoc.unesco.org/ark:/48223/pf0000381137 (Accessed 10 May 2025).

[19] R. Luckin, W. Holmes, M. Griffiths, and L. B. Forcier, Intelligence Unleashed: An Argument for AI in Education; Pearson: New York, NY, USA, 2016. Available: https://www.researchgate.net/publication/299561597_Intelligence_Unleashed_An_argument_for_AI_in_Education

[20] C. R. Sunstein, #Republic: Divided Democracy in the Age of Social Media; Princeton Univ. Press: Princeton, NJ, USA, 2017.

[21] European Commission, Ethical Guidelines for Trustworthy AI: Education Sector Applications; EU AI Office: Luxembourg, 2024. Available: https://www.europarl.europa.eu/cmsdata/196377/AI%20HLEG_Ethics%20Guidelines%20for%20Trustworthy%20AI.pdf

[22] L. Floridi, et al., “AI4People—An ethical framework for a good AI society: Opportunities, risks, principles, and recommendations,” Minds Mach., vol. 28, no. 4, pp. 689–707, 2018.
